AI is Getting Rid of Testing Woes: The 9 Metrics That Show Us Why

Software testing is facing a revolution. Artificial intelligence isn’t just another flashy tool – it’s transforming how we ensure software quality. But how do you know if your AI-powered testing is hitting the mark? If you don’t have the right metrics, you’re flying blind. Let’s cut through the hype and get down to the KPIs (Key Performance Indicators) that truly illuminate the success of AI-driven software testing.

Why Traditional Metrics Just Won’t Cut It

Sure, classic testing metrics still matter. Things like test coverage, defect rates, and all those old favourites have their place. They’re like checking the oil in your car – important, but they won’t tell you if the fancy self-driving system is working. Here’s where traditional metrics fall short with AI-driven testing:

Complexity: AI test models can consider mind-boggling numbers of variables and potential scenarios. Traditional metrics can’t capture that complexity.

Adaptability: AI models are meant to learn and improve. Static metrics don’t reflect that dynamic growth.

Focus on Outcomes: AI testing aims for better outcomes, not just ticking off tasks. We need KPIs that tell the ‘Did it work?’ story.

The 9 AI-Powered Testing KPI Metrics That Matter

Let’s dive into the metrics that give you an insightful view of your AI testing efforts:

1- Test Coverage Beyond the Usual Suspects

Traditional code coverage is a start, but AI-driven testing gives you two more kinds of coverage to track:

Data Coverage: How diverse is the data your AI model trains on? This is key for reliability in real-world situations.

Scenario Coverage: AI test generation can uncover corner cases humans never dream of. Measure how many new scenarios AI helps you test.

Pro Tip: Visualize coverage, with heatmaps showing areas where AI excels vs. where you might need to boost it.

2- Defects Escaping into the Wild (Ouch!)

The ultimate goal is to find nasty bugs before users do. ‘Defect escape rate’, the share of all known defects that were found in production rather than during testing, is still the boss metric. But with AI, you can slice it in new ways:

Types of defects AI misses: Patterns here tell you where your models need tweaking.

Defect severity vs. AI: Did AI catch the high-impact stuff, even if it missed some minor ones?
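To make the slicing concrete, here is a minimal sketch of defect escape rate, overall and per severity. The function names, the `(severity, escaped)` record shape, and the sample defects are all made up for illustration; your defect tracker will have its own fields.

```python
from collections import Counter


def escape_rate(found_in_testing: int, escaped_to_production: int) -> float:
    """Share of all known defects that reached production."""
    total = found_in_testing + escaped_to_production
    return escaped_to_production / total if total else 0.0


def escape_rate_by_severity(defects):
    """defects: iterable of (severity, escaped) pairs (hypothetical record shape)."""
    totals = Counter()
    escaped = Counter()
    for severity, did_escape in defects:
        totals[severity] += 1
        if did_escape:
            escaped[severity] += 1
    return {severity: escaped[severity] / totals[severity] for severity in totals}


# Illustrative data only: True means the defect was found by users, not by testing.
sample_defects = [
    ("critical", False), ("critical", False),
    ("major", True), ("major", False), ("major", False), ("major", False),
    ("minor", True), ("minor", False),
]

print(escape_rate(found_in_testing=6, escaped_to_production=2))  # 0.25
print(escape_rate_by_severity(sample_defects))
# {'critical': 0.0, 'major': 0.25, 'minor': 0.5}
```

In this made-up sample, nothing critical escaped even though half the minor defects did, which is the kind of pattern the severity view is meant to surface.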

3- AI Model Accuracy vs. Reality Bites

Don’t fall for models that ace synthetic tests but flop in production. You need:

Precision: What percentage of AI-flagged issues are actual problems? Avoid false alarms.

Recall: Of the real problems out there, how many does your AI find? Higher is better.

Pro Tip: Track accuracy over time. A rising trend means your model’s learning, not just getting lucky.
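Both numbers fall out of two sets: what the AI flagged and what turned out to be real. A small sketch, assuming you can label each issue with an ID (the IDs below are invented for the example):

```python
def precision_recall(flagged: set, real: set) -> tuple:
    """Return (precision, recall) for a set of AI-flagged issues vs. confirmed issues."""
    true_positives = len(flagged & real)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(real) if real else 0.0
    return precision, recall


# Hypothetical issue IDs, for illustration only.
ai_flagged = {"BUG-1", "BUG-2", "BUG-3", "BUG-4"}
confirmed_real = {"BUG-1", "BUG-2", "BUG-5"}

precision, recall = precision_recall(ai_flagged, confirmed_real)
print(f"precision={precision:.2f} recall={recall:.2f}")
# precision=0.50 recall=0.67
```

Here half of the flags were false alarms (precision 0.50) and one real bug out of three was missed (recall 0.67). Recomputing this per release gives you the trend line the Pro Tip asks for.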

4- But Not All Automation Is Equal

AI’s superpower is automating the mind-numbing parts of testing. Measure the impact:

Test generation efficiency: How many new test cases does AI create per hour, across different complexity levels?

Maintenance savings: How much less time do you spend fixing flaky, outdated manual tests, thanks to AI?

5- Time is Money: AI-Driven Speed Boost

Fast feedback loops are testing heaven. AI can shine here:

Time to first meaningful test: How quickly does AI generate tests covering your riskiest areas?

Regression speedup: How much faster does AI run your full regression suite vs. a human-only approach?

Pro Tip: Don’t just measure speed in isolation. Quality still matters! Pair speed metrics with those from earlier sections.
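One way to keep speed and quality side by side is to report them together. This is only a sketch: the function names, the report fields, and the numbers are assumptions, and "quality held" is defined here simply as the defect escape rate not getting worse.

```python
def regression_speedup(baseline_minutes: float, ai_minutes: float) -> float:
    """How many times faster the AI-assisted regression run is than the baseline."""
    if baseline_minutes <= 0 or ai_minutes <= 0:
        raise ValueError("durations must be positive")
    return baseline_minutes / ai_minutes


def speed_report(baseline_minutes, ai_minutes, escape_rate_before, escape_rate_after):
    """Pair the speedup with a simple quality check (escape rate did not rise)."""
    return {
        "speedup": regression_speedup(baseline_minutes, ai_minutes),
        "quality_held": escape_rate_after <= escape_rate_before,
    }


# Made-up numbers: a 4-hour suite now finishes in 1 hour, escape rate 12% -> 10%.
print(speed_report(240, 60, escape_rate_before=0.12, escape_rate_after=0.10))
# {'speedup': 4.0, 'quality_held': True}
```

A 4x speedup with `quality_held` set to `False` would be a warning sign, not a win.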

6- The “Aha!” Factor: Insights Beyond Bugs

AI models can dredge up patterns humans miss. Measure this serendipity:

Unusual usage patterns: Did AI tests reveal how users stress your system in unexpected ways? This helps prevent future issues.

Performance bottlenecks: AI might find these during functional testing, aiding optimization way before load testing.

Pro Tip: This metric is qualitative at first. Track AI-driven insights and how they lead to product improvements over time.

7- Tester Happiness – The Unquantifiable Advantage

Is your AI testing tool a joy, or a pain? Don’t underestimate the impact on your team:

Ease of use: Can testers interact with AI without a Ph.D. in Machine Learning?

Trust: Do testers believe the AI-generated results, or is there constant second-guessing?

8- The ROI Question: Is AI Worth the Investment?

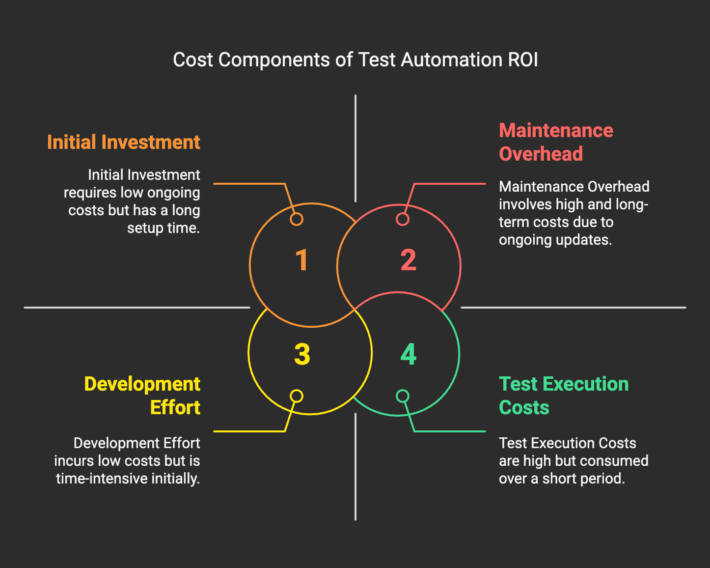

All those fancy metrics are pointless if AI costs more than it saves. Track:

Cost per defect found (AI vs. Traditional): Play the long game, as this might get worse before it gets better.

Opportunity cost: What could your team be doing if not bogged down in manual drudgery AI can solve?
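Cost per defect found is simple arithmetic, which makes it easy to compare the two approaches. The figures below are invented purely to show the calculation; plug in your own tooling, infrastructure, and people costs.

```python
def cost_per_defect(total_cost: float, defects_found: int) -> float:
    """Total testing spend divided by defects found; infinite if nothing was found."""
    if defects_found == 0:
        return float("inf")
    return total_cost / defects_found


# Illustrative figures only, for one comparable period.
traditional = cost_per_defect(total_cost=50_000, defects_found=100)
ai_driven = cost_per_defect(total_cost=60_000, defects_found=150)

print(traditional)  # 500.0
print(ai_driven)    # 400.0
```

In this made-up case the AI-driven approach costs more in total but less per defect. Early on, while defect counts are still low, the AI figure may well be the higher one, so compare the two over several periods.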

9- Don’t Just Measure, ACT!

Metrics are your dashboard, not the destination. Here’s how to turn data into improvement:

Set realistic baselines: AI won’t be perfect out of the gate. Measure what you have now to see progress clearly.

Track trends, not just snapshots: A single bad day doesn’t equal doom. Look for patterns over weeks/months.

Communicate relentlessly: Show the team why these metrics matter and how they impact the product (and their lives!)
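For the "trends, not snapshots" point, a moving average is about the simplest tool that works. The sketch below smooths a weekly count of escaped defects; the window size and the weekly numbers are assumptions chosen for illustration.

```python
def moving_average(values, window: int):
    """Simple moving average; returns one value per full window."""
    if window <= 0 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


# Made-up weekly counts of escaped defects. Week 3 looks like a bad week on its own.
weekly_escaped = [9, 3, 6, 3, 3, 0]

print(moving_average(weekly_escaped, window=3))
# [6.0, 4.0, 4.0, 2.0]
```

The raw series jumps around, but the smoothed one drifts from 6.0 down to 2.0, which is the pattern over weeks that a single snapshot would hide.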

Final Pro Tip: AI Metrics Are a Moving Target

This field is young! The “perfect” AI testing KPIs might change fast. Be ready to adapt:

Stay plugged in: Testing blogs and conferences are full of cutting-edge ideas for measurement.

Collaborate: Share what works (and what doesn’t!) with other teams on this AI journey.

Conclusion

If you’re still hungry for more, there are always deeper dives to be done on specific metrics. But this should get you off to a fantastic start in harnessing the power of KPIs to guide and supercharge your AI-driven software testing revolution!